Testing Generative AI Agent Applications

(Previous Title: Achieving Confidence in Enterprise AI Agent Applications)

I am an enterprise developer; how do I test my AI agent applications??

I know how to test my traditional software, which is deterministic (more or less…), but I don’t know how to test my AI agent applications, which are inherently stochastic and therefore nondeterministic.

Welcome to The AI Alliance project to advance the state of the art for Enterprise Testing of Generative AI Agent Applications. We are building the knowledge and tools you need to achieve the same testing confidence for your AI applications that you have for your traditional applications.

Note:

This site isn’t about using AI to generate conventional tests (or code). You can find many online resources on that topic. Instead, this site focuses on how to do testing of any kind when an application contains generative AI components, given the nondeterminism they introduce.

The Challenge We Face

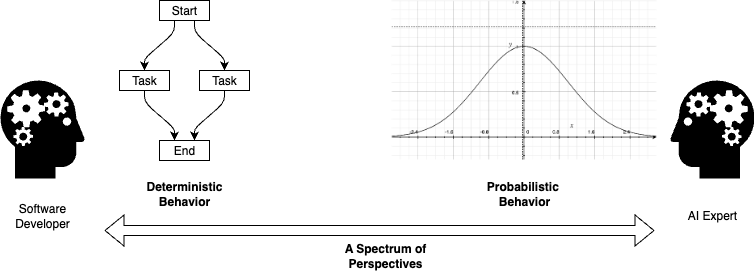

We enterprise software developers know how to write Repeatable and Automatable tests. In particular, we rely on Determinism when we write tests to verify expected Behavior and to ensure that no Regressions occur as our code base evolves. Why is determinism a key ingredient? We know that if we pass the same arguments repeatedly to most Functions (with some exceptions), we will get the same answer back consistently. This property enables our core testing techniques, which give us the essential confidence that our applications meet our requirements and implement the Use Cases our customers expect. We are accustomed to unambiguous pass/fail answers!
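To make the contrast concrete, here is a minimal Python sketch. `add_tax` stands in for an ordinary deterministic function, and `fake_generate` is a hypothetical stand-in for a generative model that samples one of several equally valid phrasings at random; neither comes from this project or any library.

```python
import random

def add_tax(amount_cents: int, rate_percent: int) -> int:
    """Deterministic: the same inputs always produce the same output."""
    return amount_cents + amount_cents * rate_percent // 100

def fake_generate(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a generative model: it samples one of
    several valid phrasings, so repeated calls with one prompt can differ."""
    phrasings = [
        "Your appointment is confirmed for Monday.",
        "You're booked for Monday.",
        "Confirmed: Monday appointment.",
    ]
    return rng.choice(phrasings)

# A conventional test: exact equality gives an unambiguous pass/fail answer.
assert add_tax(1000, 8) == 1080

# The same style of test is brittle for stochastic output: repeated calls
# with an unseeded generator yield a set of distinct, equally valid answers.
rng = random.Random()
outputs = {fake_generate("Book me for Monday", rng) for _ in range(20)}
print(len(outputs))  # almost always greater than 1, so `==` tests would be flaky
```

Every phrasing is an acceptable answer, yet an `assert output == expected` test would fail at random, which is exactly the problem the rest of this guide addresses.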

Problems arise when we introduce Generative AI Models, where generated output is inherently Stochastic, meaning the outputs are governed by a probability model, and hence nondeterministic. We can’t write the same kinds of tests now, so what alternative approaches should we use instead? The problems are compounded when we have applications built on Agents, each of which will have some stochastic behavior of its own, if it encapsulates a generative model.
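A back-of-the-envelope calculation shows why chaining agents compounds the problem. The sketch below assumes, purely for illustration, that each agent in a sequential chain independently produces an acceptable result with the same probability `p`; real agents are rarely independent, so treat the numbers as rough intuition only.

```python
def chain_success_probability(p: float, num_agents: int) -> float:
    """Probability that every agent in a sequential chain succeeds, assuming
    each one independently succeeds with the same probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if num_agents < 0:
        raise ValueError("num_agents must be non-negative")
    return p ** num_agents

for n in (1, 3, 5, 10):
    print(f"{n:>2} agents: {chain_success_probability(0.95, n):.3f}")
```

Under these assumptions, agents that are each acceptable 95% of the time succeed end to end only about 86% of the time in a chain of three, 77% with five, and 60% with ten.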

In contrast, our AI-expert colleagues (researchers and data scientists) use the tools of Probability and Statistics to analyze stochastic model responses and to assess how well the models perform against particular objectives. For example, a model might score 85% on a Benchmark for high-school-level mathematical knowledge. Is that good enough? It depends on the intended application! Rarely are simple pass/fail answers available.
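One of the simplest statistical tools helps frame the "is 85% good enough?" question: a confidence interval that reflects how many benchmark questions the score is based on. The sketch below uses the Wilson score interval, one standard choice among several; the question counts are hypothetical.

```python
import math

def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a pass rate; z = 1.96 gives roughly 95%."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(
        p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)
    )
    return center - half_width, center + half_width

# The same 85% score is much less certain on 40 questions than on 1,000.
for correct, total in [(34, 40), (850, 1000)]:
    low, high = wilson_interval(correct, total)
    print(f"{correct}/{total} = {correct / total:.0%}, 95% CI: [{low:.3f}, {high:.3f}]")
```

For these hypothetical counts, the interval is roughly 71% to 93% for 34 of 40 correct, but only about 83% to 87% for 850 of 1,000, so whether "85%" is good enough also depends on how much evidence stands behind it.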

Figure 1: The spectrum between deterministic and stochastic behavior, and the people accustomed to them!

We have to bridge this divide. As developers, we need to understand and adapt these data science tools for our needs. This will mean learning some probability and statistics concepts, but we shouldn’t need to become experts. Similarly, our AI-expert colleagues need to better understand our needs, so they can help us take their work and use it to deliver reliable, trustworthy, AI-enabled products.

Project Goals

The goals of this project are twofold:

- Develop and document strategies and techniques for testing generative AI applications that eliminate nondeterminism where feasible and, where it is not, still allow us to write effective, repeatable, and automatable tests. This work also informs architecture and design decisions.

- Publish detailed, reusable examples and guidance for developers and AI experts on these strategies and techniques.

TODO:

This user guide is a work in progress. You will find a number of ideas we are exploring and planned additions indicated as TODO items. See also the project issues and discussion forum. We welcome your feedback and contributions.

Overview of This Site

Tips:

- Use the search box at the top of any page to find specific content.

- Capitalized Terms link to glossary definitions.

- Most chapters have a Highlights section at the top that summarizes the key takeaways from that chapter.

- Many chapters end with an Experiments to Try section for further exploration.

- This AI Alliance blog post summarizes the motivation for this project.

We start with a deeper dive into The Problems of Testing Generative AI Agent Applications.

Then we discuss Architecture and Design concepts that are informed by the need for effective testing to ensure our AI applications are reliable and do what we expect of them. We explore how tried-and-true principles still apply, although they often need updating:

- Test-Driven Development: Despite its name, TDD is as much a design methodology as a testing discipline, promoting incremental delivery and iterative development. What tools does TDD provide for attacking the AI testing challenge? What do AI-specific tests look like in a TDD context?

- Component Design: How do classic principles of coupling and cohesion help us encapsulate generative AI behaviors in ways that make them easier to develop, test, and integrate into whole systems?
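As a small illustration of both ideas, the sketch below hides the model behind a narrow interface so that tests can swap in a deterministic fake. `TextModel`, `FakeModel`, `triage_reply`, and the emergency-referral rule are hypothetical names and behavior invented for this example, not part of any library or of this project's code. The test checks a property of the response rather than an exact string, which is one way AI-specific tests can be written first in a TDD style.

```python
from typing import Protocol

class TextModel(Protocol):
    """Narrow interface that encapsulates the generative model."""
    def generate(self, prompt: str) -> str: ...

class FakeModel:
    """Deterministic stand-in for tests: returns canned replies by keyword."""
    def __init__(self, canned: dict[str, str], default: str) -> None:
        self.canned = canned
        self.default = default

    def generate(self, prompt: str) -> str:
        for keyword, reply in self.canned.items():
            if keyword in prompt.lower():
                return reply
        return self.default

def triage_reply(model: TextModel, user_message: str) -> str:
    """Hypothetical application logic under test: deterministic code around
    the model enforces a rule the model alone might not follow reliably."""
    reply = model.generate(f"Patient says: {user_message}")
    if "chest pain" in user_message.lower() and "emergency" not in reply.lower():
        reply += " If this is an emergency, call your local emergency number."
    return reply

def test_chest_pain_always_mentions_emergency() -> None:
    # The fake deliberately returns an inadequate reply to exercise the rule.
    model = FakeModel(canned={"chest pain": "Try to rest for a while."},
                      default="How can I help?")
    reply = triage_reply(model, "I have chest pain")
    assert "emergency" in reply.lower()

test_chest_pain_always_mentions_emergency()
```

Because `triage_reply` depends only on the `TextModel` interface, the same function can later be exercised against a real model in integration tests, where property checks like this one become statistical rather than exact.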

With this background on architecture and design principles, we move to the main focus of this site, Testing Strategies and Techniques that ensure our confidence in AI-enabled applications:

- Unit Benchmarks: Adapting Benchmark techniques, including synthetic data generation, for Unit Testing and similarly for Integration Testing and Acceptance Testing.

- LLM as a Judge: Using a “smarter” LLM to judge generative responses, including evaluating the quality of synthetic data.

- External Tool Verification: Cases where non-LLM tools can test our LLM responses.

- Statistical Evaluation: Understanding the basics of statistical analysis and how to use it to assess test and benchmark results.

- Lessons from Systems Testing: Testing large, complex systems is also less deterministic than writing Unit Tests and other fine-grained tests. What lessons can we learn here?

- Testing Agents: Agents are inherently more complex than application patterns that use “conventional” code wrapping invocations of LLMs. Agents are evolving to be more and more autonomous in their capabilities, requiring special approaches to testing. This chapter explores the requirements and available approaches.
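The sketch below combines three of these ideas in miniature: a tiny "unit benchmark" of prompts, an external (non-LLM) check using Python's standard `json` module, and a pass-rate threshold in place of a single pass/fail assertion. `flaky_model`, the prompts, the 20 trials per prompt, and the 0.8 threshold are all hypothetical choices for illustration; a real suite would call your model and pick thresholds with the kind of analysis the Statistical Evaluation chapter covers.

```python
import json
import random

def flaky_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical model stand-in: usually returns valid JSON, sometimes not."""
    if rng.random() < 0.9:
        return json.dumps({"intent": "refill_prescription", "prompt": prompt})
    return "Sure! Here is your answer: {intent: refill}"  # not valid JSON

def is_valid_response(text: str) -> bool:
    """External tool verification: a real JSON parser, not an LLM, decides
    whether the response is well formed and has the required field."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and "intent" in data

def run_unit_benchmark(prompts: list[str], trials: int, seed: int) -> float:
    """Run every prompt `trials` times and return the overall pass rate."""
    rng = random.Random(seed)  # seeded so a benchmark run can be reproduced
    results = [
        is_valid_response(flaky_model(prompt, rng))
        for prompt in prompts
        for _ in range(trials)
    ]
    return sum(results) / len(results)

prompts = [
    "I need more of my blood pressure pills.",
    "Please refill my prescription.",
]
THRESHOLD = 0.8  # assumed acceptance threshold, not a recommendation
pass_rate = run_unit_benchmark(prompts, trials=20, seed=42)
verdict = "PASS" if pass_rate >= THRESHOLD else "FAIL"
print(f"pass rate {pass_rate:.2f} vs threshold {THRESHOLD}: {verdict}")
```

In a real suite the final comparison would be an assertion in your test framework; the threshold and the number of trials trade test cost against statistical confidence.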

The final section is more speculative. It considers ways that generative AI might change software development, and testing specifically, in more fundamental ways:

- Can We Eliminate Source Code? Computer scientists have wondered for decades why we still program computers using structured text, i.e., programming languages. Attempts to switch to alternatives, such as graphical “drag-and-drop” environments, have failed (with a few exceptions). Could generative AI finally eliminate the need for source code?

- Specification-Driven Development: Building on the idea of eliminating source code, can we specify enough detail using human language (e.g., English) to allow models to generate and validate whole applications?

- From Testing to Tuning: Our current approach to testing is to use tests to detect suboptimal behavior, fix it somehow, then repeat until we have the behavior we want. Can we instead add an iterative and incremental model tuning process that adapts the model to the desired behavior automatically?

Throughout this guide, we use a healthcare ChatBot example. A Working Example summarizes all the features discussed for this example.

Finally, there is a Glossary of Terms and References for additional information.

How to Use This Site

This site is designed to be read from beginning to end, but who does that anymore?? We suggest you at least skim the content that way, then go to areas of particular interest. For example, if you already know Test-Driven Development, you could read just the parts that discuss what’s unique about TDD in the AI context, then follow links to other sections for more details.

Help Wanted!

This is very much a work in progress. This content will be updated frequently to fill in TODO gaps and to reflect our current thinking, emerging recommendations, and new reusable assets. Your contributions are needed and most welcome!

See also About Us for more details about this project and the AI Alliance.

Additional Links

- This project’s GitHub Repo (see also issues and the discussion forum)

- Related projects:

- The AI Trust and Safety User Guide: General guidance for evaluating AI applications for safety and trustworthiness.

- Evaluation Is for Everyone: Understanding evaluation tools for benchmarks, such as how they work and how to use them.

- Evaluation Reference Stack: An example stack of evaluation tools.

- The AI Alliance: